| Markov Models |

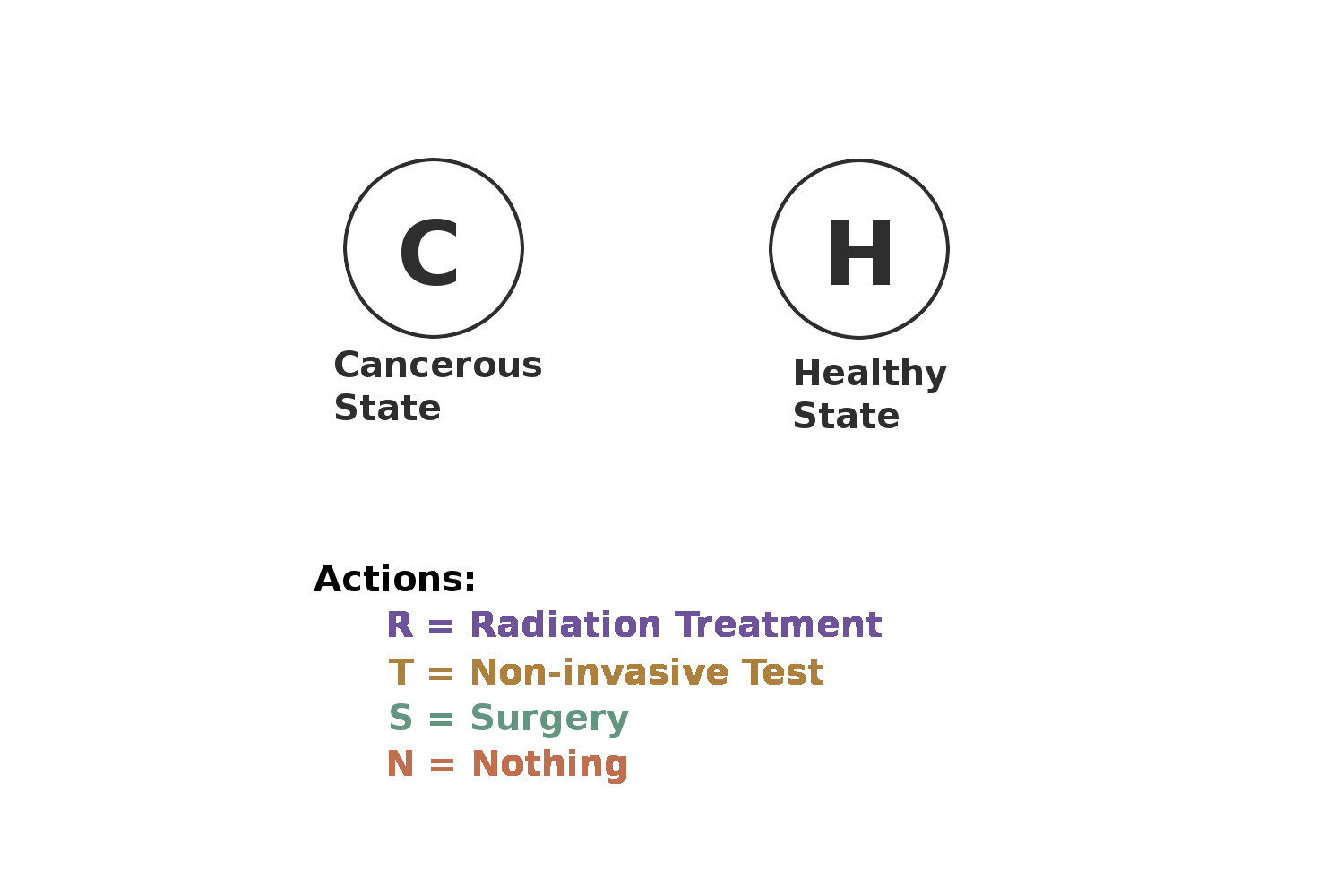

Do we have control over the state transitions? |

||

|---|---|---|---|

| NO | YES | ||

| Are the states completely observable? |

YES | Markov Chain |

MDPMarkov Decision Process |

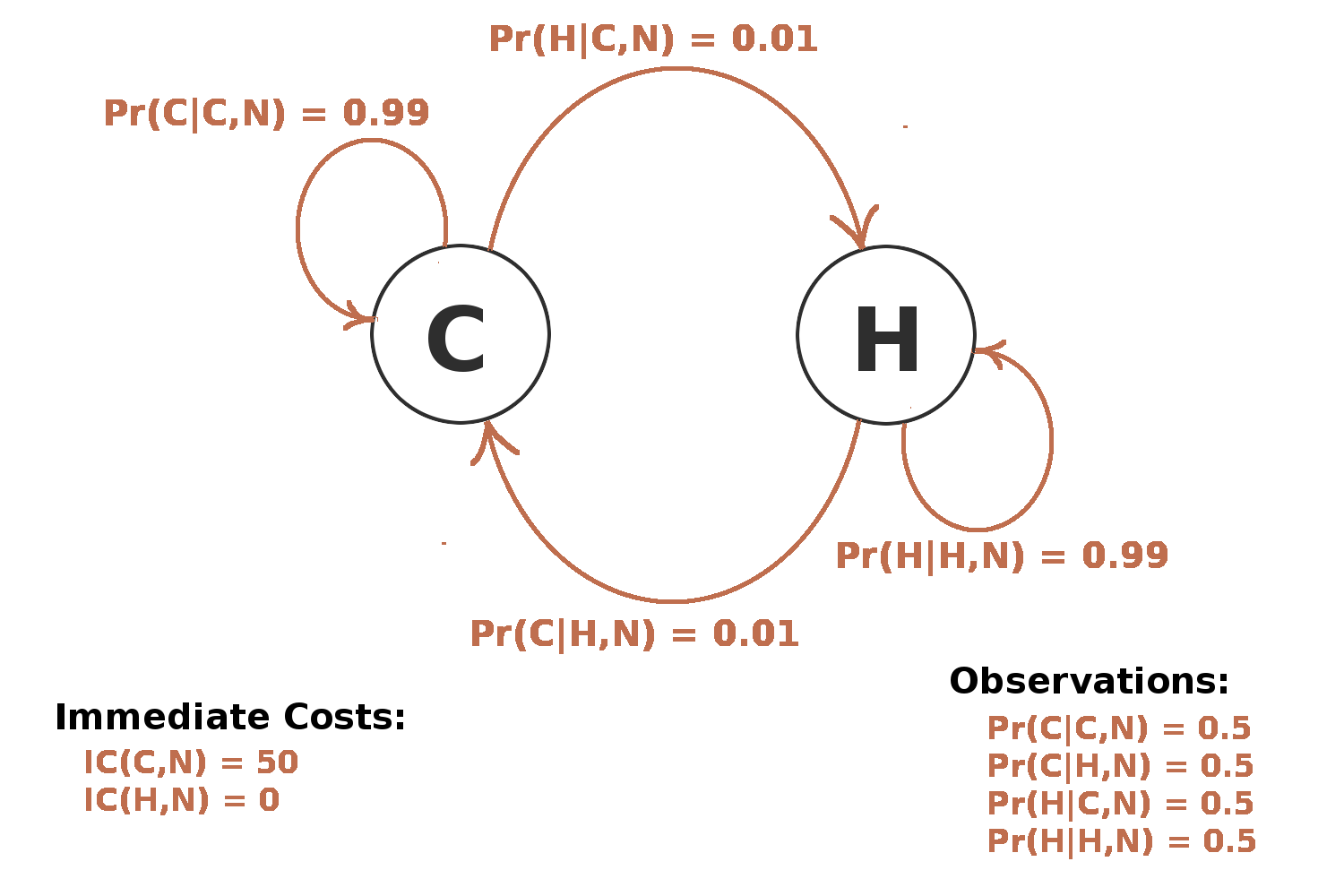

| NO | HMMHidden Markov Model |

POMDPPartially ObservableMarkov Decision Process |

|
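The two axes of the table can be made concrete with a small sketch. It assumes finite state, action, and observation sets indexed by integers. All matrices (`T`, `T_by_action`, `O`) and function names (`step_chain`, `induced_chain`, `hmm_likelihood`, `belief_update`) are hypothetical and used only for illustration.

The sketch shows how the models relate:

- Adding control (moving right in the table) means the transition matrix is indexed by an action.
- Losing full observability (moving down in the table) means tracking a probability distribution over states from observations.
- As a simplification, the POMDP observation model here does not depend on the action.

```python
# Sketch of the four models in the table, using plain Python lists.
# States, actions, and observations are integer indices; every matrix here
# is a hypothetical example, not taken from the original material.

def step_chain(p, T):
    """Markov chain: one step of p'(j) = sum_i p(i) * T[i][j]."""
    n = len(T)
    return [sum(p[i] * T[i][j] for i in range(n)) for j in range(n)]


def induced_chain(T_by_action, policy):
    """MDP: transitions are indexed by action, T_by_action[a][i][j].
    A fixed deterministic policy (policy[i] = action taken in state i)
    turns the MDP back into an ordinary Markov chain."""
    return [T_by_action[policy[i]][i] for i in range(len(policy))]


def hmm_likelihood(pi, T, O, observations):
    """HMM: states are hidden; O[j][o] = P(observation o | state j).
    Unscaled forward algorithm returning P(observations); fine for short sequences."""
    n = len(T)
    alpha = [pi[j] * O[j][observations[0]] for j in range(n)]
    for o in observations[1:]:
        alpha = [sum(alpha[i] * T[i][j] for i in range(n)) * O[j][o] for j in range(n)]
    return sum(alpha)


def belief_update(b, T_by_action, O, action, obs):
    """POMDP: hidden states AND actions. Update the belief b over states after
    taking `action` and seeing `obs`. Simplification: O does not depend on the action."""
    predicted = step_chain(b, T_by_action[action])          # predict with the chosen action
    unnorm = [predicted[j] * O[j][obs] for j in range(len(b))]  # weight by observation
    z = sum(unnorm)
    return [u / z for u in unnorm]                          # normalize to a distribution


T = [[0.9, 0.1], [0.5, 0.5]]                 # hypothetical chain transitions
T_by_action = [T, [[0.2, 0.8], [0.0, 1.0]]]  # action 0 reuses T, action 1 differs
O = [[0.8, 0.2], [0.3, 0.7]]                 # hypothetical emission probabilities

if __name__ == "__main__":
    print(step_chain([1.0, 0.0], T))
    print(induced_chain(T_by_action, [1, 0]))
    print(hmm_likelihood([0.5, 0.5], T, O, [0, 1]))
    print(belief_update([0.5, 0.5], T_by_action, O, action=1, obs=0))
```

The HMM forward pass and the POMDP belief update use the same predict-then-weight step. The POMDP only differs in choosing the transition matrix by action. The unscaled forward pass underflows on long sequences, so practical versions normalize at each step or work in log space.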